DX Research Group: Building AI-Driven Collaborative World Experiments

DX Research Group Team

Twitter/X | Website

Abstract

DX Research Group (DXRG) is a new lab dedicated to creating experiences and products powered by the intersection of Artificial Intelligence and cryptocurrency. Our goal is to expand the scope of what is possible within this framework across a wide range of domains, including entertainment, art, social networks, and more.

While the industry today is largely focused on two areas, improving models and building productivity tools, we believe a critically important third area is being neglected: creative systems design. To accelerate this, DXRG will be agile, working to rapidly launch novel experiments directly to participants in the crypto space. As experiments prove successful, we will flexibly look to build upon them, open-source our work, or spin off projects into cooperatives or protocols.

Our first experiment, DX01: SINGULARITY, begins on Thursday, December 5, 2024. Follow our social channels for more information.

The technological acceleration of AI is inevitable. Now, creative acceleration must begin in earnest.

DXRG Logo


"It is at work everywhere, functioning smoothly at times, at other times in fits and starts… Everywhere it is machines — real ones, not figurative ones: machines driving other machines, machines being driven by other machines, with all the necessary couplings and connections."

— Gilles Deleuze, Anti-Oedipus

Background

Machine learning is the most impactful innovation of the 21st century. Neural networks enable the raw power of computation to create new structures and patterns from data across any imaginable domain. The surprising truth that adding scale can improve performance has led to the development of large language models, which are among the most impressive recent achievements.[1] Scaling laws predict continued opportunity, driving a rush towards economic investment in artificial intelligence. The market, however, underestimates the structural changes that AI brings to society as a whole.

From the 1970s to the 2010s, the attention economy reigned. The major challenge was processing information and making decisions amid an influx of data.[2] Likewise, consumer attention became the battleground for financial success. The market still thinks in the medium of attention without realizing the shift towards a new paradigm: the systems economy.

The systems economy embraces machine learning at its core: an assemblage of linear algebra, calculus, and raw computational power that can be applied to anything and everything. Generalized systems are applied to incomprehensible scales of data that produce meaningful tools and solve previously unsolvable problems. This paradigm is fuzzy and strange since it operates on scale, not smarts. Language models are 5000-dimensional autonomously assembled Rube Goldbergs, not elegant equations. Anything can be modeled. Speed and flexibility supplant accuracy and perfection. Not properly recognizing the shift towards this new economy hinders the exploration of new possibilities, particularly in areas like creative systems design.

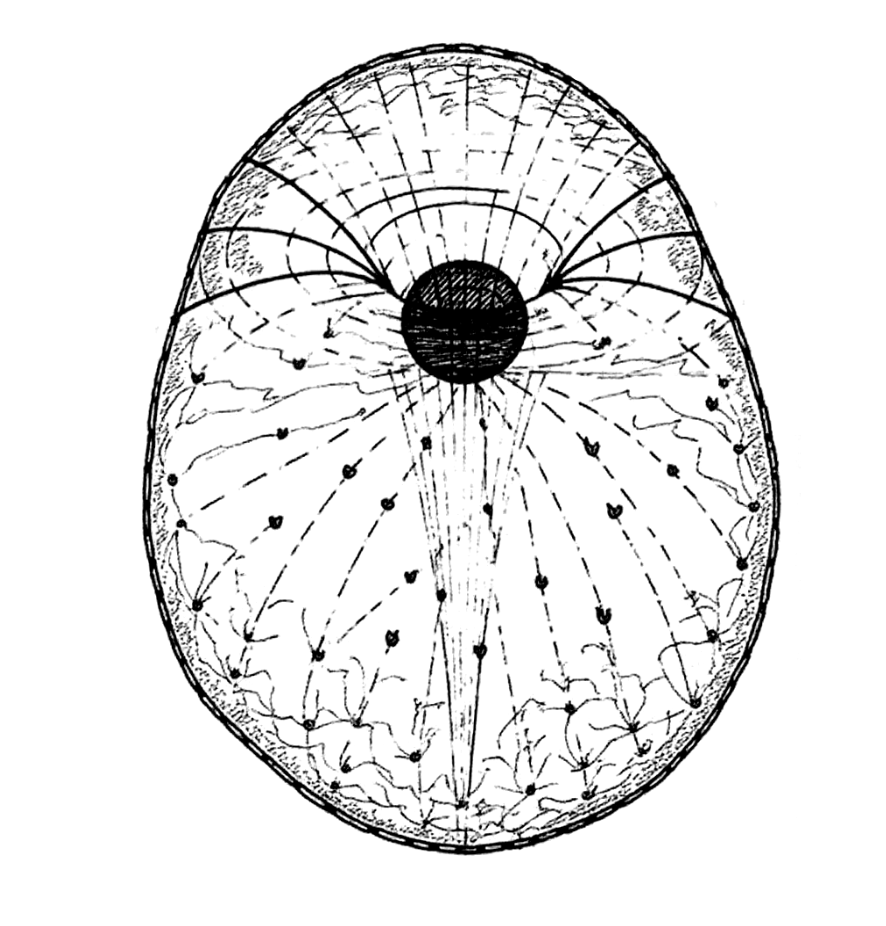

A fully autonomous Rube Goldberg agential water delivery system


The corporation and its nimble cousin, the startup, assume vast resources will be required to accomplish their goals. Their aim is to scale capabilities, development, market share, and assets as rapidly as possible. To achieve this, they focus on a single product or vertical, assuming that the form of what customers want is well understood. The systems economy shatters these assumptions. Consider how many AI startups have been made irrelevant when a single function was added to ChatGPT.[3] This difference is amplified by the pseudo-black-box nature of AI development. Until a system is developed and executed, one can never fully predict the outcome.

Today, critical progress in the systems economy has come in two forms: model development and productivity-enhancing tools. A third modality remains underdeveloped: experimentation in creative systems design. When everything can be creatively abstracted, the field of potentially valid system designs is nearly infinite. Industry leaders present video generation models as a revolution in entertainment, focusing on lower production costs rather than on what completely new experiences may be possible. For example, the ability to merely customize the ending of a movie dynamically feels inadequate in the face of a technology that can make new, creative abstractions between any systems.

What would an operating model that can embrace creative design within the systems world look like?

DX Research Group

The research group as nomadic war machine[4] painting new abstractions onto the world

To embrace creative design within the systems economy, we present DX Research Group (DXRG).[5] DXRG is a lab for experiences and products powered by the intersection of AI and crypto, searching for new ways for people to interact with complex semi-autonomous systems. The goal is to experiment in public, producing findings that culturally accelerate ways of thinking about AI systems while retaining the potential to spin off products, protocols, or structures that prove compelling.

There are numerous predecessors of the research group form, many of which have had tremendous success in crypto. Uniswap Labs and Gnosis Ltd are two strong examples of this form: agile, small private groups spinning off critically important independent products.[6] One could argue OpenAI was founded with this intent as well.

As a recent example, we credit Nous Research as an AI organization that established itself non-hierarchically and has made significant contributions in all modalities of AI progress.[7] Crypto and AI find themselves strange bedfellows not just because of a shared orientation towards the future, but also because anyone can show up and contribute.[8]

The DXRG M-A-C Framework

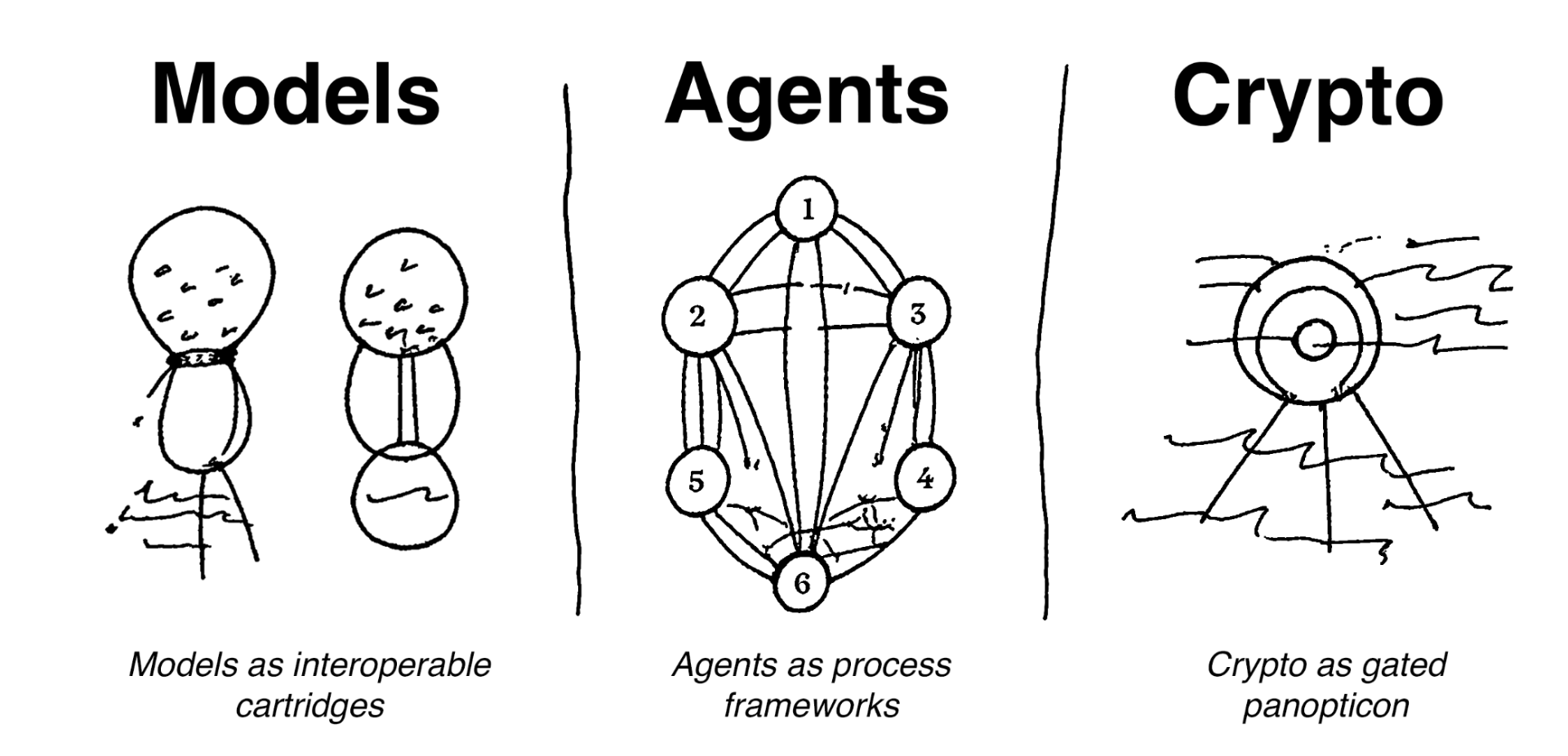

M-A-C Framework

M-A-C Framework: Models as interoperable cartridges | Agents as process frameworks | Crypto as gated panopticon


Our framework consists of three parts: Models, Agents, and Crypto.

Our goal is to remain flexible by leveraging these parts as composable systems that can be recombined, refreshed, and repurposed. We assume from the outset that models improve by the day, agential pipelines will change, and the utilization of cryptographic networks will radically change over time.

Models

Models are best thought of as modular abstraction systems. Models are often seen as placeholders for autonomous entities or even minds, but model weights are more like hyper-dimensional punch cards or networks of routing tables.[9] Most importantly, models follow scaling laws.[10] Once a model is established, one can generally expect performance to improve with scale according to a power-law relationship.
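As a rough illustration of what a power-law relationship implies, the sketch below assumes a loss of the form L(N) = a * N^(-alpha), where N is a measure of scale such as parameter count. The function names and the constants a and alpha are hypothetical values chosen for illustration; they are not fitted to any real model.

```python
def power_law_loss(n: float, a: float = 10.0, alpha: float = 0.1) -> float:
    """Hypothetical loss curve L(N) = a * N**(-alpha).

    a and alpha are illustrative constants, not measured values.
    """
    return a * n ** (-alpha)


def loss_ratio_per_scale_up(factor: float, alpha: float = 0.1) -> float:
    """Multiplier applied to the loss when scale grows by `factor`."""
    return factor ** (-alpha)


if __name__ == "__main__":
    # Each tenfold increase in scale shrinks the loss by the same ratio.
    for n in (1e8, 1e9, 1e10):
        print(f"N={n:.0e}  loss={power_law_loss(n):.3f}")
    print(f"ratio per 10x scale-up: {loss_ratio_per_scale_up(10):.3f}")
```

Under these assumed constants, every tenfold increase in N multiplies the loss by the same factor, 10^(-0.1), or roughly 0.79. That constant ratio is the sense in which improvement from scale is predictable.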

The DXRG goal is to build pipelines that use models as interoperable tools ready to be changed or upgraded as needed for the experiment at hand. At the time of writing, two state-of-the-art, open-source video models have been released that are vast improvements over what was available merely one month earlier. Being able to rapidly adjust to these successive improvements is critical.

Additionally, experiments that cannot be further scaled or do not provide a strong experience can simply be repeated with new, faster, or cheaper models at a later time.
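One way to picture models as swappable cartridges is a small interface that every model satisfies, so the pipeline never depends on a specific model. The sketch below is a minimal illustration under that assumption, not DXRG's implementation: Model, Pipeline, and the two toy echo models are hypothetical names invented here.

```python
from typing import Protocol


class Model(Protocol):
    """Hypothetical interface: any cartridge that maps a prompt to text."""

    name: str

    def generate(self, prompt: str) -> str: ...


class EchoModelV1:
    """Toy stand-in for an older model."""

    name = "echo-v1"

    def generate(self, prompt: str) -> str:
        return prompt.upper()


class EchoModelV2:
    """Toy stand-in for a newer model with the same interface."""

    name = "echo-v2"

    def generate(self, prompt: str) -> str:
        return prompt.upper() + "!"


class Pipeline:
    """Experiment logic that only relies on the Model interface."""

    def __init__(self, model: Model) -> None:
        self.model = model

    def swap(self, model: Model) -> None:
        # Replace the cartridge without touching the rest of the pipeline.
        self.model = model

    def run(self, prompt: str) -> str:
        return f"[{self.model.name}] {self.model.generate(prompt)}"


if __name__ == "__main__":
    pipeline = Pipeline(EchoModelV1())
    print(pipeline.run("hello"))
    pipeline.swap(EchoModelV2())  # re-run the same experiment on a newer model
    print(pipeline.run("hello"))
```

Because the pipeline only calls generate, repeating an experiment with a newer, faster, or cheaper model is a one-line swap.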

This approach eliminates the flaw that leads to generative AI becoming slop. When raw model outputs are considered the epitome of AI creativity, Facebook meme bots and MoMA's new AI art collection begin to look the same.

Agents

Agents are assemblages of processes. The term agent re-entered the lexicon as a way to rebrand the bot for the LLM era. While the capabilities of agents are advanced, they remain a system of relationships and rules. Language models simply expand the aperture of what can be reliably automated. Also, when considering agents, these system designs can and often should include a human-in-the-loop.

In modular design, considering agential frameworks as systems helps eliminate the terminology sprawl that rapidly occurs. Memory systems, RAG approaches, and so on are helpful but can over-engineer relationships and obscure our ability to understand or control outcomes.

Ultimately, the purpose of agential systems is to have them perform tasks at a speed or scale that frequently cannot be assessed without statistical methods. The experimental approach is critical here, as small changes to the prompt, context, or functions attached to the system can produce radically different outcomes when looped for hundreds of thousands of steps. Again, until the experiment is run, we do not know the outcome.
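A toy calculation helps show why small per-step differences matter at this scale. The sketch below assumes each step of a loop succeeds independently with a fixed probability, which is a strong simplification of any real agent. The function names and probabilities are hypothetical and chosen only for illustration.

```python
import random


def survival_probability(per_step_success: float, steps: int) -> float:
    """Chance that every one of `steps` independent steps succeeds."""
    return per_step_success ** steps


def estimate_survival(per_step_success: float, steps: int,
                      runs: int, seed: int = 0) -> float:
    """Monte Carlo estimate of the same quantity from repeated runs."""
    rng = random.Random(seed)
    survived = 0
    for _ in range(runs):
        # all() stops at the first failed step in a run.
        if all(rng.random() < per_step_success for _ in range(steps)):
            survived += 1
    return survived / runs


if __name__ == "__main__":
    steps = 100_000
    for p in (0.9999, 0.99999):
        print(f"per-step success {p}: "
              f"P(no failure in {steps} steps) = {survival_probability(p, steps):.5f}")
    # A smaller simulated experiment, compared against the closed form.
    print("simulated:", estimate_survival(0.999, 1000, runs=1000, seed=1))
    print("analytic: ", round(survival_probability(0.999, 1000), 4))
```

Under this assumption, moving per-step reliability from 0.9999 to 0.99999 changes the chance of a clean 100,000-step run from well under one in ten thousand to roughly 37 percent. Real systems are not independent step by step, which is one reason outcomes have to be measured over many runs and cannot simply be predicted.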

We believe the goal of agential design is to create the frameworks that can create new possibilities in the world. In part, we hope to inspire others to think widely in this scope and beyond the anthropocentric model of agent as chatbot.

Crypto

Currently, crypto does not necessarily have a natural connection to AI.[11] However, two properties of crypto offset some inherent weaknesses in user interactions with AI: public visibility and secure gating. Both of these attributes add stakes to interactions with AI systems that might otherwise feel arbitrary.

For example, an AI system that takes in user prompts can require all interactions to be fully onchain. This gives a level of accountability, permanence, and public visibility to all interactions. Additionally, onchain access controls can gate interactions more easily than traditional systems can. One of the common challenges of AI systems is the sense that interactions are arbitrary and that nothing internal to the system changes as a result of them. Secure visibility adds new vectors for engagement.
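The two properties described above, public visibility and gating, can be sketched without a blockchain as an append-only log in which each entry commits to the previous one by hash. This is a plain Python stand-in, not a smart contract, and it only illustrates the idea. InteractionLog, the allowlist, and the example addresses are all hypothetical.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder hash for the first entry's predecessor


def entry_hash(prev_hash: str, sender: str, prompt: str) -> str:
    """Hash an entry together with the hash of the entry before it."""
    payload = json.dumps(
        {"prev": prev_hash, "sender": sender, "prompt": prompt},
        sort_keys=True,
    )
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


class InteractionLog:
    """Append-only prompt log with an allowlist (a stand-in for gating)."""

    def __init__(self, allowed: set[str]) -> None:
        self.allowed = set(allowed)
        self.entries: list[dict[str, str]] = []

    def submit(self, sender: str, prompt: str) -> str:
        if sender not in self.allowed:
            raise PermissionError(f"{sender} is not allowed to interact")
        prev = self.entries[-1]["hash"] if self.entries else GENESIS
        digest = entry_hash(prev, sender, prompt)
        self.entries.append(
            {"prev": prev, "sender": sender, "prompt": prompt, "hash": digest}
        )
        return digest

    def verify(self) -> bool:
        """Anyone holding the log can recompute the chain and detect edits."""
        prev = GENESIS
        for e in self.entries:
            expected = entry_hash(e["prev"], e["sender"], e["prompt"])
            if e["prev"] != prev or e["hash"] != expected:
                return False
            prev = e["hash"]
        return True


if __name__ == "__main__":
    log = InteractionLog(allowed={"0xabc"})
    log.submit("0xabc", "open the gate")
    log.submit("0xabc", "close the gate")
    print("log valid:", log.verify())
```

Altering any earlier prompt changes its hash and breaks every later link, so tampering is detectable by anyone who re-runs verify. A public chain adds what this sketch lacks: no single party controls the log.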

From the standpoint of provability of outputs, language models (and neural networks generally) are deterministic systems at their core; randomness is added via probabilistic sampling. Having full documentation of inputs and outputs, as well as the rules of the system, can provide assurance that a given system's outputs are valid.[12] In the future, all of these attributes can be further enhanced as zero-knowledge (ZK) technologies develop.[13]
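The split between a deterministic core and sampled randomness can be shown with a toy example. The sketch below assumes a fixed list of logits standing in for a model's output at one step; the function names and values are hypothetical. It is not a real model and does not address practical sources of nondeterminism such as hardware differences.

```python
import math
import random


def softmax(logits: list[float], temperature: float = 1.0) -> list[float]:
    """Deterministic map from logits to a probability distribution."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def greedy(logits: list[float]) -> int:
    """No randomness: always pick the highest-scoring token."""
    return max(range(len(logits)), key=lambda i: logits[i])


def sample(logits: list[float], seed: int, temperature: float = 1.0) -> int:
    """Randomness enters only here, and a recorded seed makes it repeatable."""
    rng = random.Random(seed)
    probs = softmax(logits, temperature)
    return rng.choices(range(len(probs)), weights=probs, k=1)[0]


def sequence_logprob(step_logits: list[list[float]], tokens: list[int]) -> float:
    """Log-probability of a claimed output, given the logits at each step."""
    return sum(math.log(softmax(l)[t]) for l, t in zip(step_logits, tokens))


if __name__ == "__main__":
    logits = [2.0, 1.0, 0.1]  # hypothetical scores for three tokens
    print("greedy token:", greedy(logits))
    print("same seed, same sample:", sample(logits, seed=7) == sample(logits, seed=7))
    print("logprob of token 0:", round(sequence_logprob([logits], [0]), 4))
```

If inputs, sampling rules, and seeds are all recorded, a third party with the same weights can in principle replay a run. Where a seed is unavailable, the log-probability of the claimed output still indicates whether it is plausible given the input.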

Ethereum's smart contracts remain the most compelling way to access these qualities of crypto for the benefit of AI experimentation. DXRG is agnostic in choosing between EVM chains. The choice between Ethereum mainnet and L2s will be based on the tradeoffs between cost, speed, and longevity that best fit the experiment. Additionally, the DXRG approach to experiments will utilize a wide range of output objects as participants' takeaways rather than always remaining wedded to a single form (such as ERC-721 or ERC-20).

Towards the Future

The synthesis of our approach is building interoperable frameworks to experiment with new potential products made possible by the intersection of AI and crypto. Our framework is flexible: we assume models get better, agential frameworks change, and crypto applications will vary by experiment.

DX Research Group intends to ensure that these experiments accelerate the cultural thinking around AI. The accumulation of knowledge, tooling, capabilities, and data will be made publicly available and understandable so others can build where we leave off. Additionally, analyzing and sharing the behavioral data on how participants engaged with different systems over time will be just as important as sharing the M-A-C experimental design. In each experiment, we will take the time to draw out relevant insights and data, publishing the findings where appropriate.

The ability to spin off successful enterprises via open source, community efforts, cooperatives, or other forms becomes a key strength of the research group approach.

DX01: SINGULARITY

Our first experiment, DX01: SINGULARITY, will occur on Dec 5, 2024 on Base. This will be the first opportunity to engage with our experiments and an initial step in an experience that we have been developing over the last year.

Follow our X for updates: https://x.com/dxrgai

DX01: SINGULARITY – Phase 1: A Shared Agential World Simulation


Notes


1. The "bitter lesson" of leaning into computation's strengths is a relevant point here. (incompleteideas.net)

2. "Attention economy" as introduced by Herbert A. Simon in Designing Organizations for an Information-Rich World. (Wikipedia)

3. It is worth mentioning here that there is some truth to Sam Altman's claim that a "one person unicorn" will be created in the future.

4. Nomadic war machine here is a reference to Deleuze and Guattari's concept in A Thousand Plateaus: put simply, an agile, territory-defining machine that carves fresh meaning into the world.

5. The letters 'DX' represent the multifaceted and abstract nature of machine learning; however, we often return to Dream eXpedition and the 'dx' of derivatives as two key interpretations.

6. We also see early implementations of this type of structure with Mondragon's private node branching into cooperatives across various industries. Similarly, XEROX PARC and Project Cybersyn are two strong examples of the research group that were far ahead of their time.

7. Nous Research has organically developed innovations in modeling, productivity, and creative systems. Websim, in particular, is a strong way of thinking about new experiences offered by LLMs in line with our goals. (nousresearch.com)

8. Satoshi Nakamoto is the most world-shattering example of this phenomenon.

9. We purposefully do not weigh in on philosophical debates in cognitive science. We are more interested in the Spinozan call of "What can the body do?" as a mode of inquiry: how the inherent qualities of the medium of model weights may be applied in various creative contexts.

10. 0x113d often references XEROX PARC's intuition about Moore's Law being the key insight in their ability to innovate so far into the future of personal computing. (x.com/0x113d)

11. Many will debate this point. There have been good discussions from Vitalik with reasons why crypto and AI are a natural fit. Consider also that crypto is one place where bots are extremely successful and have a very clear purpose. (vitalik.eth.limo)

12. The ability to do this can be limited by model design or lack of access to input variables. Generally, for open-source models, logprobs can be used to determine if an output is valid given an input.

13. ZK libraries already exist that can prove neural networks were run correctly. Computational difficulty is the current barrier to broader adoption, but progress is continually being made. (e.g. github.com/SafeAILab/zkDL)